Over the last 17 years, three little letters have instilled fear in every engineering team I’ve worked with: SLA, or Service Level Agreement. An SLA is simply a commitment from the team that owns a service, promising it will be available for a specified percentage of the time. But teams consistently shy away from making these commitments – even when they are forgiving.

In this blog, I’ll explore the hidden costs of avoiding SLAs and the dangers of over-engineering for unattainable uptime. I’ll look at how a shift in mindset can help teams become more comfortable with being measured and some practical steps that can improve both service reliability and user satisfaction. Read on to discover how a more mature approach to SLAs can transform the way we deliver services and better serve the British public.

SLA avoidance

Historically, SLAs have been used as a commercial stick to penalise teams that don’t meet their commitments. Financial penalties for failing to meet an SLA have conditioned teams to either avoid them or insist on SLAs that don’t truly reflect service availability. Instead, teams fall back on metrics like bug report response times or processing a set number of tasks – neither of which benefits the users or adds business value.

TIP: Change the perspective

View SLAs as tools for improvement, not punishment. Changing the culture and mindset of service teams will help them focus on quality and user trust.

Are we overspending for uptime?

When there’s no SLA, the idea that a service should have 100% uptime fills the vacuum. But aiming for 100% availability often results in excessive redundancy, such as running 3 servers instead of 2. Cloud providers are more than happy to charge for extra infrastructure, even if there is no need for it. Does the value of the service really justify tripling the cost? And what’s worse, redundancy doesn’t always guarantee uptime. During a recent outage at a cloud provider across 2 of its UK data centres, the rush to its third data centre overloaded capacity, causing widespread failures.

Moreover, redundancy doesn’t just increase costs linearly. For example, running servers across multiple data centres incurs fees for data transfer between locations. I once worked in a team that ran a database across 3 data centres. The constant data shuffling between them to ensure redundancy added around £80k in unnecessary costs in just one month. And when 2 of the data centres went down, there weren’t enough specialist servers in the remaining data centre to keep the system operational.
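The capacity problem in both of these outages comes down to simple arithmetic. As a rough sketch (the function name and the assumption that traffic is spread evenly across sites are mine, for illustration only), the load that lands on each surviving data centre can be estimated like this:

```python
def load_per_surviving_site(total_sites: int, failed_sites: int) -> float:
    """Return the load on each surviving site as a multiple of its normal load.

    Assumption: traffic is spread evenly across sites, both before and
    after the failure. Real traffic is rarely this tidy.
    """
    if not 0 <= failed_sites < total_sites:
        raise ValueError("failed_sites must be between 0 and total_sites - 1")
    surviving_sites = total_sites - failed_sites
    return total_sites / surviving_sites


# Losing 1 of 3 sites, then losing 2 of 3 sites.
print(load_per_surviving_site(3, 1))
print(load_per_surviving_site(3, 2))
```

Under these assumptions, surviving the loss of 2 out of 3 data centres means the remaining one has to absorb three times its normal load. If that headroom isn’t there, the third data centre adds cost without adding the protection it was bought for.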

TIP: Evaluate redundancy needs

Conduct a thorough analysis of service requirements to work out the appropriate redundancy levels. Teams should do a cost-benefit analysis to make sure that the financial investment aligns with the expected return in service reliability.

The cost of over-engineering

When teams are pressured to achieve perfect reliability, they often over-engineer their systems regardless of whether there is a business need. This complexity comes with high operational costs, requiring more staff to maintain. It also introduces new failure modes, ironically making outages more likely.

Over-optimising reliability also carries an opportunity cost. Teams may spend months optimising for rare outages when they could instead be building new features, improving user experience, or reducing costs. For example, a government team once spent 6 months developing a process to avoid more than 5 seconds of downtime during a database migration. The money spent on this could have been used for more impactful work on the service or, at a more fundamental level, spent elsewhere in the public sector, such as on hiring additional nurses for the NHS.

TIP: Look at cost versus benefit

Discuss service costs in relatable terms, such as how many nurses could be hired with the budget. This helps clarify the trade-offs between cost and reliability.

The security risks of avoiding updates

As services mature, they generally become more reliable. Bugs that cause early issues are fixed over time. However, as services mature the biggest risk to service reliability becomes changes to the system. Teams aiming for an unobtainable 100% uptime may consciously avoid making necessary updates or implement overly elaborate change management processes. This can be dangerous.

Many modern web services rely on dozens, even hundreds, of third-party libraries – each one a potential source of security vulnerabilities. These need regular updates, but teams often fear making changes that could cause downtime. The result? Outdated services with a plethora of small vulnerabilities that build up over time. If these vulnerabilities line up, like the holes in the ‘Swiss cheese’ model of risk, a security breach becomes far more likely. Further, services that avoid updates can become less relevant to users as they fail to meet their evolving needs.

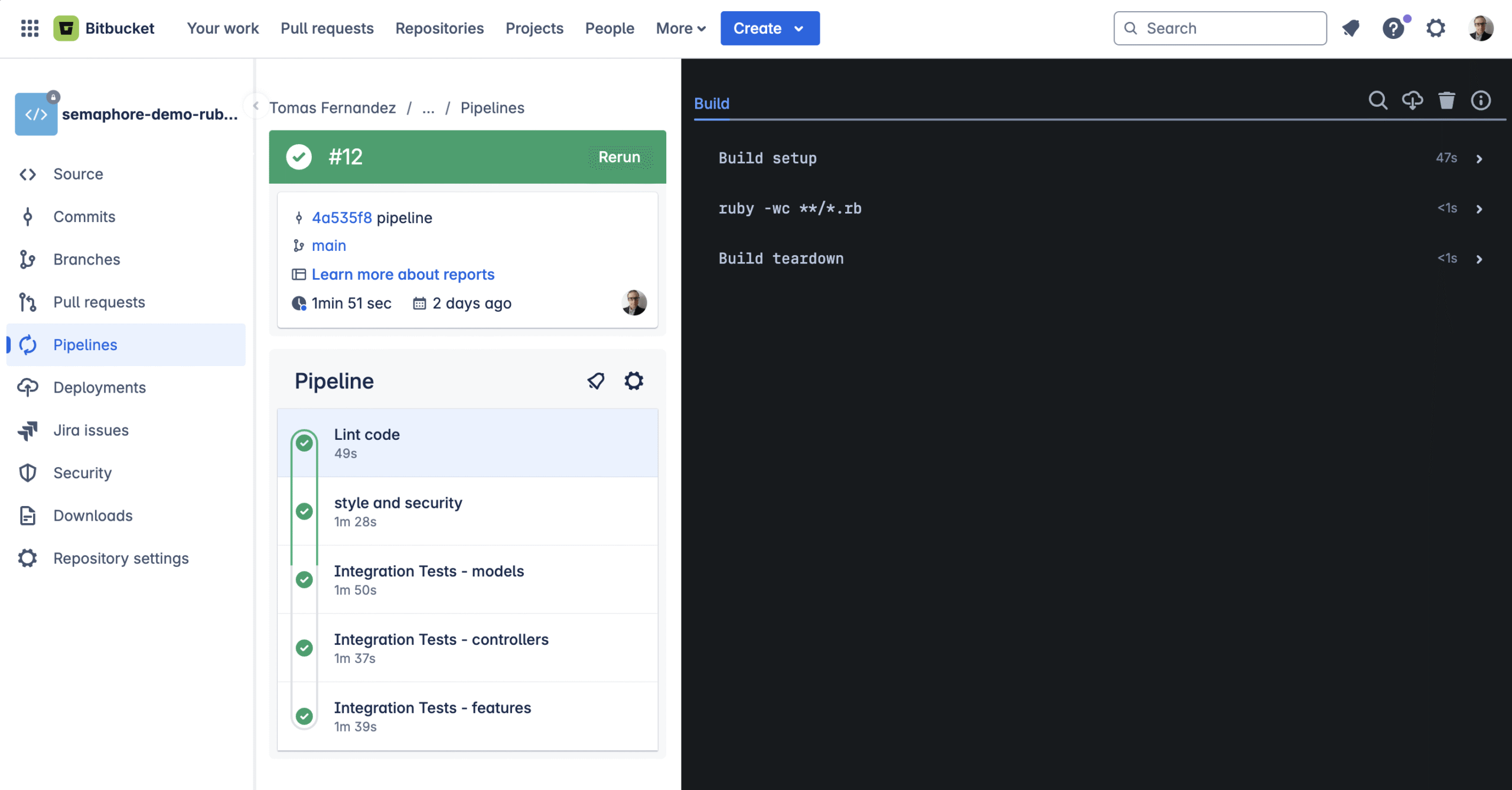

Frequent releases are essential for maintaining secure and relevant services. Teams familiar with continuous integration (CI) and continuous deployment (CD) often release updates tens of times daily. But when changes become rare, the skills and knowledge needed to do this safely fade, increasing the risk of failure and making teams even more hesitant to make updates.

TIP: Maintain operational skills

Create an environment where teams feel safe to deploy updates. Regular releases not only keep services relevant but also help teams keep up the skills needed to manage updates effectively.

Planning for the inevitable downtime

The perception that a service needs to achieve 100% availability prevents teams from preparing for planned downtime. Many services don’t have a ‘shutter’ mechanism to notify users when a service is intentionally offline for maintenance, for example during a database migration. Providing users with a simple, branded message would offer a much better experience than a generic 4xx or 5xx error page if they try to access a service during downtime.
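A shutter doesn’t need to be complicated. The sketch below is one possible shape for it, written as a small piece of Python WSGI middleware using only the standard interface. The class name `Shutter`, the page wording and the 15-minute `Retry-After` default are all illustrative assumptions rather than an established pattern from any particular service. It still returns a 503 status so that monitoring tools and search engines know the outage is temporary, but the user sees a clear message instead of a generic error page.

```python
SHUTTER_HTML = b"""<!doctype html>
<html lang="en">
  <head><title>Service unavailable</title></head>
  <body>
    <h1>Sorry, this service is unavailable</h1>
    <p>We are carrying out planned maintenance. Please try again later.</p>
  </body>
</html>"""


class Shutter:
    """WSGI middleware that serves a maintenance page while enabled."""

    def __init__(self, app, enabled=False, retry_after_seconds=900):
        self.app = app
        self.enabled = enabled
        self.retry_after_seconds = retry_after_seconds

    def __call__(self, environ, start_response):
        if not self.enabled:
            # Shutter is up: pass the request through to the real service.
            return self.app(environ, start_response)
        headers = [
            ("Content-Type", "text/html; charset=utf-8"),
            ("Content-Length", str(len(SHUTTER_HTML))),
            # Hint to clients about when it is worth trying again.
            ("Retry-After", str(self.retry_after_seconds)),
        ]
        start_response("503 Service Unavailable", headers)
        return [SHUTTER_HTML]


def service(environ, start_response):
    """Stand-in for the real application (hypothetical)."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Service is running"]


# Wrap the service once; flip `enabled` when maintenance starts and ends.
app = Shutter(service, enabled=False)
```

In practice the `enabled` flag would more likely be driven by configuration or a feature toggle than set in code, so that the shutter can be raised without a deployment.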

Government digital services are expensive, but the convenience they offer the citizen generally justifies the cost. However, teams often lose sight of just how much they’re spending. A useful approach is to frame costs in relatable terms, like how many nurses could be employed with that money. When you look at it this way, striving for 99.999% availability is almost never worth the price.

TIP: Plan for downtime

When maintenance is needed, inform users in advance with clear messages. This reduces frustration and improves the experience.

How can the government move the needle?

UK government services must pass through a series of service assessments during their development stages – discovery, alpha, beta and live. However, many services remain in beta indefinitely. This isn’t because they’re unfit for live deployment, but because teams fear that funding might be cut once they move to the live stage. If these services were built to meet realistic SLAs, they could be operated at a fraction of the current cost.

The Government Digital Service (GDS) standard includes the principle ‘operate a reliable service’. This states that ‘Users expect to be able to use online services 24 hours a day, 365 days a year.’ However, this phrasing unintentionally sets the expectation of 100% uptime, which is unrealistic. Teams are set up to fail if they aim for this, as most government services simply don’t have the budget to support such reliability.

Instead, the service standard should say: ‘operate a service as reliable as necessary to meet user needs.’ It could include benchmarks like:

| Digital service | SLA | Allowed downtime |
| --- | --- | --- |
| Report-littering.gov.uk | 97% | About 43 minutes per day |
| Submit your tax return | 99% | About 14 minutes per day |
| Submit your tax return (in January) | 99.999% | Under 1 second per day |
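The relationship between an SLA percentage and the downtime it allows is simple arithmetic, and it is worth checking before committing to a figure. A minimal sketch follows; the function name is my own, and it assumes downtime is averaged over a 24-hour day:

```python
SECONDS_PER_DAY = 24 * 60 * 60


def allowed_downtime_seconds_per_day(sla_percent: float) -> float:
    """Return the daily downtime budget, in seconds, for a given SLA percentage."""
    if not 0 <= sla_percent <= 100:
        raise ValueError("sla_percent must be between 0 and 100")
    return SECONDS_PER_DAY * (100 - sla_percent) / 100


for sla in (97, 99, 99.9, 99.999):
    seconds = allowed_downtime_seconds_per_day(sla)
    print(f"{sla}% -> {seconds / 60:.1f} minutes ({seconds:.1f} seconds) per day")
```

Running this for a few candidate SLAs makes it obvious how quickly each extra ‘9’ shrinks the room a team has for maintenance and mistakes.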

Matching service expectations to needs

However, it’s crucial to stress that the juice has to be worth the squeeze. Additional ‘9s’ of availability should only be pursued when the societal cost of not achieving that reliability outweighs the investment required. For example, if the cost of 0.001% of tax returns being filed late is less than the cost of guaranteeing 99.999% uptime, then we should avoid spending more money for such small improvements.
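This comparison can be written down as a back-of-the-envelope calculation. The sketch below is illustrative only: the function name, the cost per minute of downtime and the extra annual spend are made-up numbers, and it assumes the cost of downtime scales linearly with its duration, which will not hold for every service.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def avoided_downtime_cost(current_sla: float, target_sla: float,
                          cost_per_minute_down: float) -> float:
    """Estimate the yearly cost avoided by moving from one SLA to a higher one.

    Assumption: every minute of downtime costs society the same amount.
    """
    if not 0 <= current_sla <= target_sla <= 100:
        raise ValueError("expected 0 <= current_sla <= target_sla <= 100")
    minutes_saved = MINUTES_PER_YEAR * (target_sla - current_sla) / 100
    return minutes_saved * cost_per_minute_down


# Hypothetical figures: moving from 99% to 99.9% costs an extra £500,000
# a year, and each minute of downtime costs £50 in inconvenience.
extra_annual_spend = 500_000
avoided = avoided_downtime_cost(99, 99.9, cost_per_minute_down=50)

print(f"Cost avoided per year: £{avoided:,.0f}")
print("Worth it" if avoided > extra_annual_spend else "Not worth it")
```

The hard part is agreeing on the cost of a minute of downtime, not the calculation itself. But even a rough estimate gives the team something concrete to discuss.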

Big players like Amazon or Google have modelled what a 15-minute outage will cost them in lost revenue. The web is filled with advice for attaining near-perfect availability based on the models of these tech giants. However, the cost of a short outage to a government service is usually inconvenience or, at worst, reputational damage.

In my opinion, most government services could tolerate up to 15 minutes of downtime per day without significant consequences. Of course some services are critical – lives may depend on them. But these are the exception, not the rule. The truth is that most government services still have paper or phone-based alternatives, so our yardstick for online service reliability should be grounded in reality, not in comparison to private sector giants like YouTube or Facebook.

TIP: Set realistic goals

Instead of aiming for 100% uptime, put in place realistic SLAs that match your user needs and operational realities. Use benchmarks that reflect the importance of each service.

Graceful degradation

Richard Pope’s Platformland shares a vision of hundreds of interconnected services lowering barriers to the UK public and enabling a model where the public is served proactively. However, I fear that this interconnectivity will demand even more unattainable reliability, when what we need is pragmatic thinking about how services should fail. The current model for failure is that an error is raised and a Site Reliability Engineer (SRE) is alerted. However, this rarely results in the original user need being fulfilled. Instead, the offending code will be fixed and the user will be forced to use an alternative means of realising their intent.
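One way to think about failing more gracefully is to accept the user’s request even when a downstream service is unavailable, and complete it later. The sketch below illustrates that idea only. The names `submit`, `drain` and `DownstreamUnavailable` are hypothetical, and the in-memory queue is a simplification: a real service would need durable storage so that requests survive a restart.

```python
from collections import deque


class DownstreamUnavailable(Exception):
    """Raised when a service we depend on cannot be reached."""


# Simplification: a real service would use a durable queue, not memory.
retry_queue = deque()


def submit(request, send):
    """Try to process a request now; if the dependency is down, keep it for later."""
    try:
        return {"status": "completed", "reference": send(request)}
    except DownstreamUnavailable:
        retry_queue.append(request)
        return {
            "status": "accepted",
            "message": "We have your request and will process it shortly.",
        }


def drain(send):
    """Retry queued requests in order, stopping at the first failure.

    Returns the number of requests successfully processed.
    """
    processed = 0
    while retry_queue:
        try:
            send(retry_queue[0])
        except DownstreamUnavailable:
            break
        retry_queue.popleft()
        processed += 1
    return processed
```

Here `send` stands in for whatever call reaches the downstream service. The user’s need is still met, just later than usual, and the engineer is alerted to fix the fault rather than to rescue individual users.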

Embracing SLAs

By following some of the tips in this blog, I believe reliability and user satisfaction can be significantly improved. Healthy discussions about the appropriate level of reliability for services will lead to reductions in costs and waste. Ultimately, a positive approach to SLAs will enhance services and better meet the needs of the British public.

The post Why public sector digital can’t afford to shy away from SLAs appeared first on Made Tech.