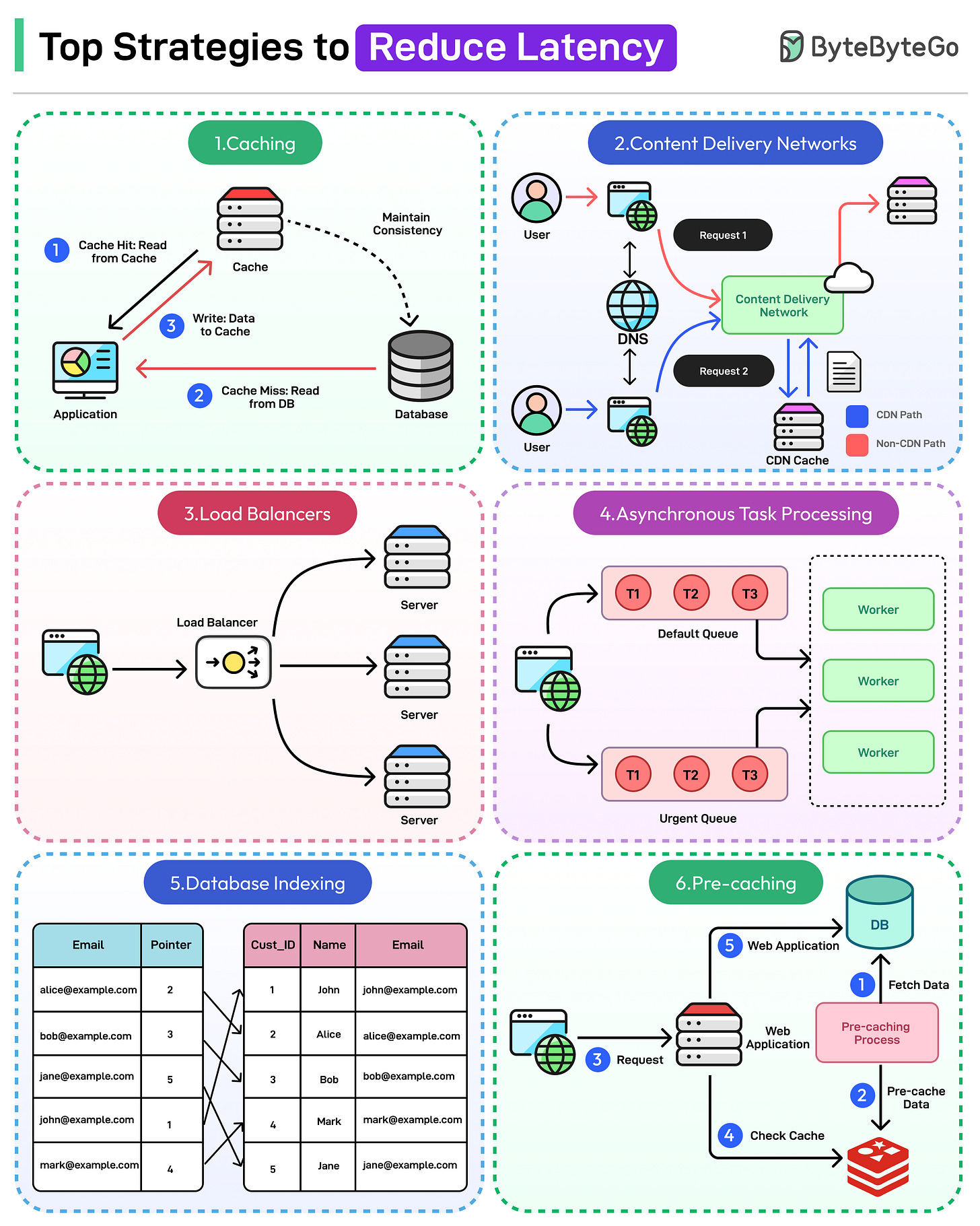

Top Strategies to Reduce Latency

Latency is a fundamental concept to consider when designing any application. It refers to the delay between a user's action and the system's response.

In technical terms, latency measures the time it takes for data to travel from a source to a destination. It is often reported as round-trip time (RTT): the time for a request to reach its destination and for the response to come back. Latency is usually expressed in milliseconds (ms) and is critical in determining the speed and responsiveness of applications, websites, and networks.
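To make "there and back" concrete, here is a minimal sketch that measures round-trip time in milliseconds. It assumes a throwaway TCP echo server on localhost, so the numbers mostly reflect local network-stack overhead rather than real network distance. The helper names `start_echo_server` and `measure_rtt_ms` are hypothetical, chosen for illustration.

```python
import socket
import statistics
import threading
import time


def _echo(server_sock: socket.socket) -> None:
    """Accept a single connection and echo back every byte it receives."""
    conn, _ = server_sock.accept()
    with conn:
        while True:
            data = conn.recv(1024)
            if not data:  # client closed the connection
                break
            conn.sendall(data)


def start_echo_server() -> tuple[socket.socket, int]:
    """Hypothetical helper: start a local echo server on a free port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    threading.Thread(target=_echo, args=(server,), daemon=True).start()
    return server, server.getsockname()[1]


def measure_rtt_ms(host: str, port: int, samples: int = 5) -> list[float]:
    """Hypothetical helper: time request-plus-echo round trips in milliseconds."""
    message = b"ping"
    rtts = []
    with socket.create_connection((host, port)) as client:
        # Disable Nagle's algorithm so small messages are sent immediately.
        client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(samples):
            start = time.perf_counter()
            client.sendall(message)
            received = b""
            while len(received) < len(message):  # wait for the full echo
                chunk = client.recv(len(message) - len(received))
                if not chunk:
                    raise ConnectionError("server closed the connection early")
                received += chunk
            rtts.append((time.perf_counter() - start) * 1000)
    return rtts


if __name__ == "__main__":
    server, port = start_echo_server()
    rtts = measure_rtt_ms("127.0.0.1", port)
    server.close()
    print(f"median RTT: {statistics.median(rtts):.3f} ms over {len(rtts)} samples")
```

Pointing `measure_rtt_ms` at a remote host that runs an echo service would add propagation and queuing delays to each sample; reporting the median of several samples smooths out scheduling noise on either machine.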

High latency can have a profound impact across business domains:

- User Experience: Slow-loading websites frustrate users and lead to higher bounce rates. A Google study found that slowing down the search results page by 100 to 400 milliseconds reduced the number of searches per user by 0.2% to 0.6%.

- Business Operations: Delays in real-time applications such as video conferencing or online collaboration tools can hamper productivity. E-commerce platforms with slow page loads suffer from lower customer satisfaction and reduced sales. For example, Amazon estimated that a 1-second increase in latency could cost $1.6 billion in sales annually.

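- SEO Rankings: Search engines factor page speed into their rankings. For example, Google uses Core Web Vitals as a ranking signal. These metrics include Largest Contentful Paint (LCP), which tracks loading speed, and Interaction to Next Paint (INP), which replaced First Input Delay (FID) in March 2024 and measures how quickly a page responds to user input. High latency increases page load and response times, which can hurt these metrics, search rankings, and organic traffic.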

In this article, we will learn about latency and its types in detail. We will explore top strategies to reduce latency, such as caching, content delivery networks (CDNs), load balancing, asynchronous processing, database indexing, pre-caching, data compression, and connection reuse.

Latency vs Bandwidth vs Throughput
