AI is rapidly moving from experimental to operational. Yet projects often stall because they’ve been built around generic AI models that can be expensive to maintain, hard to integrate and not tailored to what the organisation actually needs. The result is teams struggling to move past the testing phase.

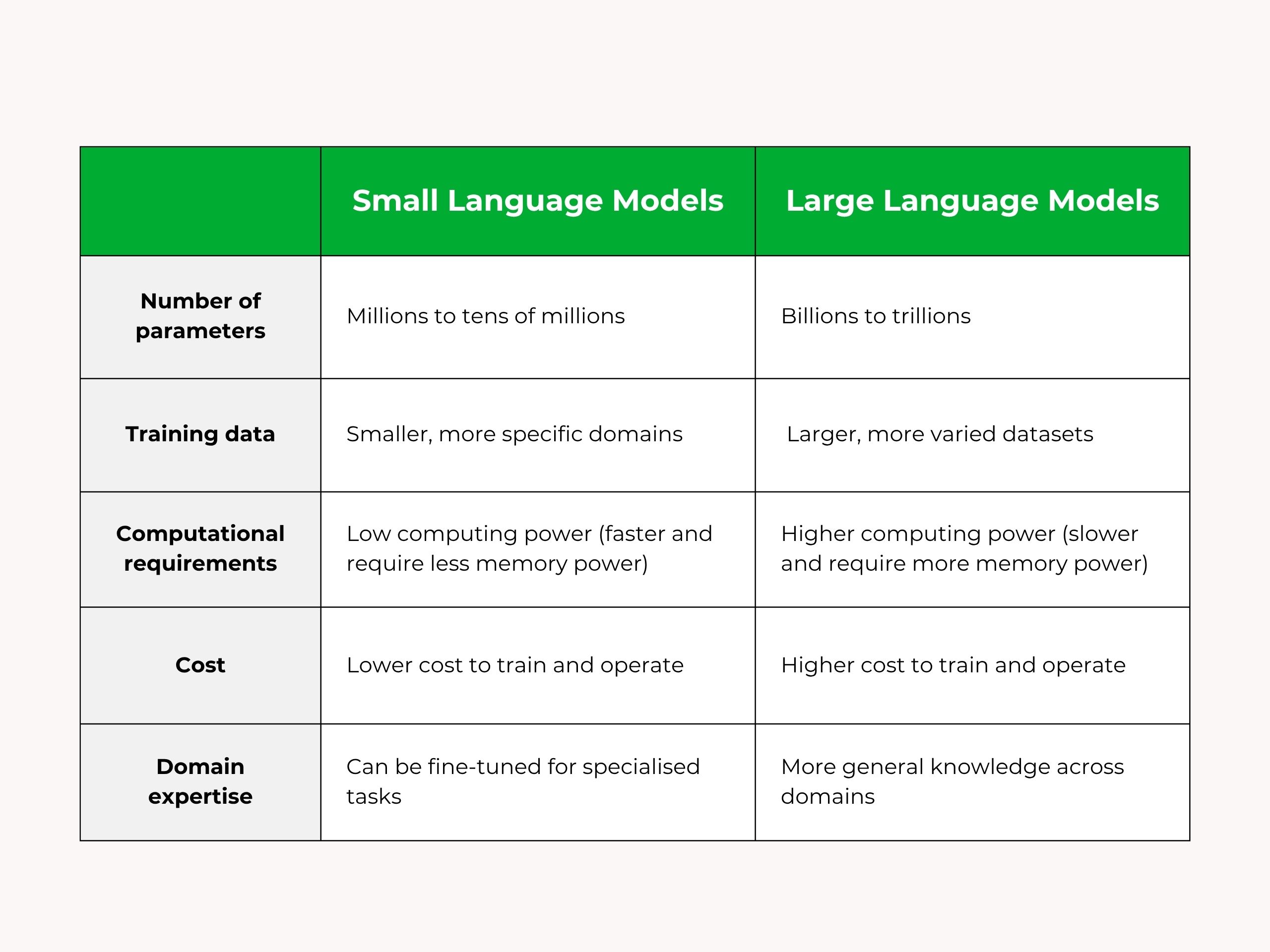

The way around this may be to think smaller. Small Language Models (SLMs) are emerging as a much more sustainable and scalable way to adopt AI. They may not be as ‘powerful’ as Large Language Models (LLMs), but they offer benefits that allow projects to escape the testing phase and solve real-world problems.

Bigger isn’t always better when it comes to AI

A lot of clients come to us with the assumption that more power equals better results. The thinking is: if we use the biggest, most powerful model out there, we’ll be covered. But in practice, those LLMs bring complications along with their power and size, especially for well-defined, specific use cases.

Think of it like driving through London. A van or lorry is big and powerful, yes, but is it the right choice for narrow urban streets? Not really. In that environment, a small city car that’s lighter, less powerful and more affordable is actually the better choice.

SLMs are designed to do one thing well. They’re faster to train, cheaper to deploy and easier to iterate on. As a rule of thumb, you can get around 80% of the results with 20% of the resources. And that’s often more than enough, particularly when you’re building a proof of concept or delivering something operational in a short timeframe.

A real-world example: Helping the Met Office turn feedback into forecasts

We saw this approach pay off recently in a project with the Met Office. They had a clear challenge: over 1.3 million user-submitted comments that needed to be categorised and analysed. That workload was falling on one analyst. They weren’t looking for a fancy AI assistant. They just needed a faster, smarter way to surface the right information from their ever-growing dataset.

So we built a solution using TinyLlama, a small model only 638MB in size (about the same amount of data as just under one hour of standard-definition Netflix streaming). It didn’t need to understand everything. It just needed to take a user prompt like:

“Show me comments about the beach between 01/04/2025 and 05/04/2025”

…and turn it into a structured query.

That output was then used to search a vector database and, within seconds, return a bespoke dataset related to the topic of the query. No overkill. No unnecessary complexity. Just a tool that did one thing well and did it fast.
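To make that concrete, here’s a minimal Python sketch of what a structured query like this could look like and how a model’s output might be checked before it’s used. It isn’t the code from the Met Office project: the JSON shape, the field names (`topic`, `start`, `end`), the `StructuredQuery` class and both helper functions are assumptions made for illustration, and the vector search itself is left out.

```python
import json
from dataclasses import dataclass
from datetime import date, datetime

DATE_FORMAT = "%d/%m/%Y"  # assumed: matches the DD/MM/YYYY dates in the example prompt


@dataclass(frozen=True)
class StructuredQuery:
    """Hypothetical shape of the query the model is asked to produce."""
    topic: str
    start: date
    end: date


def parse_model_output(raw: str) -> StructuredQuery:
    """Validate the model's JSON text and turn it into a StructuredQuery.

    Raises ValueError if the JSON is malformed, a field is missing or
    empty, a date does not parse, or the date range is reversed.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("model output must be a JSON object")

    for field in ("topic", "start", "end"):
        if not isinstance(data.get(field), str) or not data[field].strip():
            raise ValueError(f"missing or empty field: {field}")

    start = datetime.strptime(data["start"].strip(), DATE_FORMAT).date()
    end = datetime.strptime(data["end"].strip(), DATE_FORMAT).date()
    if start > end:
        raise ValueError("start date is after end date")
    return StructuredQuery(data["topic"].strip().lower(), start, end)


def in_date_range(comment_date: date, query: StructuredQuery) -> bool:
    """Return True if a comment's date falls inside the query's inclusive range."""
    return query.start <= comment_date <= query.end


# Stand-in for what a model might return for the example prompt above.
raw = '{"topic": "beach", "start": "01/04/2025", "end": "05/04/2025"}'
query = parse_model_output(raw)
print(query.topic, query.start.isoformat(), query.end.isoformat())
```

Checking the output like this before it reaches the database is one way to stop a small model’s occasional formatting slips from turning into bad searches.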

And the great thing? We didn’t need a lot of infrastructure or resources to make it happen. We deployed it via AWS, but it can also run locally if needed.

Why Small Language Models work

What made that project successful wasn’t just the tech. It was how specific we were with the problem and the data. We didn’t train the model on everything; we trained it on exactly what it needed to perform the task.

Too often in AI, we see teams default to ‘the more data the better’. But actually, data quality and relevance beat data volume every time. In this case, we used real prompts from users to train the model. That specificity meant we could train, refine and implement it much more quickly than we could have with an LLM.
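As an illustration of what training on real prompts can look like, here’s a minimal Python sketch that pairs a user prompt with the structured query we’d want back and writes the pairs out as JSON Lines. This isn’t the format used on the project: the record layout (`prompt`, `completion`), the field names and the de-duplication step are all assumptions for illustration.

```python
import json


def make_record(prompt: str, topic: str, start: str, end: str) -> dict:
    """Pair a user prompt with the structured query we want the model to emit."""
    target = {"topic": topic, "start": start, "end": end}
    return {"prompt": prompt.strip(), "completion": json.dumps(target)}


def to_jsonl(records: list[dict]) -> str:
    """Serialise records as JSON Lines, skipping empty prompts and exact duplicates."""
    seen = set()
    lines = []
    for record in records:
        key = (record["prompt"].lower(), record["completion"])
        if not record["prompt"] or key in seen:
            continue
        seen.add(key)
        lines.append(json.dumps(record))
    return "\n".join(lines)


# The only example here is the prompt quoted earlier in this post.
records = [
    make_record(
        "Show me comments about the beach between 01/04/2025 and 05/04/2025",
        topic="beach",
        start="01/04/2025",
        end="05/04/2025",
    )
]
print(to_jsonl(records))
```

Dropping empty and duplicate prompts is a small nod to the quality-over-volume point: a few hundred clean, representative pairs can be more useful than thousands of noisy ones.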

Ease of use = Better adoption

The other big win here was ease of use: giving users a powerful tool without them needing to know anything about vector searches or language models. They just typed a question in plain English into a graphical user interface (GUI) and got a bespoke dataset back. That’s the kind of user experience that drives adoption. We’re not asking them to learn how the engine works. We’re handing them the keys and letting them drive.

Building AI responsibly with small language models

Another reason I’m a big advocate for SLMs is that they’re easier to govern, can be implemented with ethics at their core and have a smaller environmental impact.

Because the model is small, you can see exactly what’s going in and coming out. That means it’s easier to:

- Discover biased behaviour: With tighter control of the data going into the model, we can be proactive in auditing and tracking it.

- Understand the output: SLMs are less of a black box than LLMs, thanks to their focused training and lower complexity. This makes their output easier to explain and builds greater confidence among stakeholders.

- Comply with GDPR: With domain-specific datasets, it’s much easier to meet GDPR requirements and to remove specific data from the model’s training set.

- Be greener and more sustainable: SLMs are smaller across the board, which means fewer computational resources, significantly lower energy consumption and reduced carbon emissions. That helps clients cut costs and meet their emissions objectives.

Think fit for purpose

The calculator didn’t replace the accountant; it became a tool in their arsenal. When AI is built around a specific problem and designed with users in mind, it becomes a tool people want to use, not one they fear.

That’s the power of small models. They let you move fast, stay focused and build something that solves users’ real-world problems.

If you’re thinking about scaling AI in your organisation, I’d encourage you to ask:

- Do you know what problem you’re actually trying to solve?

- Do you have the right data – not just lots of it?

- Do you need a general-purpose model, or one that’s tailored to your needs?

If you’ve got a clear use case, you don’t need to go big. Small, focused AI can get you there faster and with less risk.

Take a look at our data and AI pages for more on what we have to offer.

The post Scaling AI responsibly with Small Language Models (SLMs) appeared first on Made Tech.